I am using Scrapy to fetch some resources, and I want to run it as a cron job that starts every 30 minutes.

The cron job:

`0,30 * * * * /home/us/jobs/run_scrapy.sh`

run_scrapy.sh:

    #!/bin/sh
    cd ~/spiders/goods
    PATH=$PATH:/usr/local/bin
    export PATH
    pkill -f $(pgrep run_scrapy.sh | grep -v $$)
    sleep 2s
    scrapy crawl good

As the script shows, I tried to kill the previous script process and its child process (scrapy) as well.

However, when I run two instances of the script, the newer instance does not kill the older one.

How can I fix that?
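
To make the goal concrete, here is a rough sketch of the behaviour I am after, not a tested fix for my setup. It assumes each wrapper records its own PID in a file so that the next run can find it. `PIDFILE`, `stop_previous` and `record_self` are hypothetical names I made up for illustration. As far as I understand, `pkill` expects a name pattern rather than a PID, so the sketch uses plain `kill` for the recorded PID and `pkill -P` for its direct children.

```shell
#!/bin/sh
# Sketch only: PIDFILE, stop_previous and record_self are hypothetical names,
# not part of my current run_scrapy.sh.

PIDFILE="${PIDFILE:-/tmp/run_scrapy.pid}"   # assumed location, one file per wrapper script

# Stop the previously recorded wrapper and its direct children (e.g. scrapy).
stop_previous() {
    [ -f "$PIDFILE" ] || return 0
    old_pid=$(cat "$PIDFILE")
    # kill -0 sends no signal; it only checks that the PID still exists.
    if [ -n "$old_pid" ] && kill -0 "$old_pid" 2>/dev/null; then
        # Children first, so they are not orphaned when the wrapper dies.
        pkill -P "$old_pid" 2>/dev/null || true
        kill "$old_pid" 2>/dev/null || true
    fi
    rm -f "$PIDFILE"
}

# Record this wrapper's PID for the next run to find.
record_self() {
    echo "$$" > "$PIDFILE"
}
```

The wrapper would then call `stop_previous`, `record_self` and `scrapy crawl good` in that order. A recorded PID could in principle be reused by an unrelated process after a reboot, which this sketch does not guard against.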

Update:

I have more than one scrapy `.sh` script; they run at different frequencies, as configured in cron.

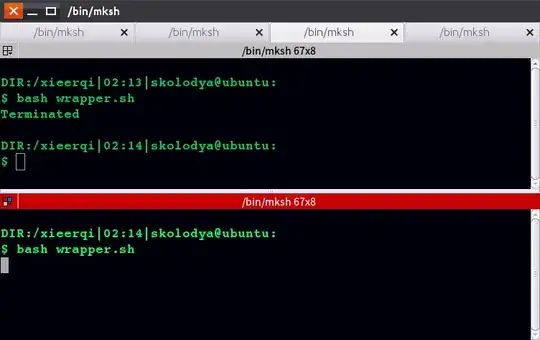

Update 2 - Test for Serg's answer:

All the cron jobs were stopped before I ran the test.

Then I opened three terminal windows, named w1, w2 and w3, and ran the commands in the following order:

1. Run `pgrep scrapy` in w3, which prints nothing (no scrapy is running at the moment).
2. Run `./scrapy_wrapper.sh` in w1.
3. Run `pgrep scrapy` in w3, which prints one process ID, say 1234 (scrapy has been started by the script).
4. Run `./scrapy_wrapper.sh` in w2, then check w1: the script there has been terminated.
5. Run `pgrep scrapy` in w3, which prints two process IDs, 1234 and 5678.
6. Press <kbd>Ctrl</kbd>+<kbd>C</kbd> in w2 (twice).
7. Run `pgrep scrapy` in w3, which prints one process ID, 1234 (the scrapy with ID 5678 has been stopped).

At this point, I have to use `pkill scrapy` to stop the scrapy process with ID 1234.
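
My reading of this test (an assumption on my part, not something I have confirmed) is that terminating the wrapper does not terminate the scrapy process it started; the child simply keeps running without its parent. The sketch below tries to reproduce that with stand-ins: `sleep 30` plays the role of `scrapy crawl good`, and an inner `sh` plays the role of `scrapy_wrapper.sh`.

```shell
#!/bin/sh
# Sketch only: "sleep 30" stands in for "scrapy crawl good"; the inner sh
# stands in for scrapy_wrapper.sh.

# The trailing ":" keeps the inner shell alive as a real parent instead of
# letting it exec the single command directly.
sh -c 'sleep 30; :' &
parent=$!
sleep 1                                  # give the child time to start

child=$(pgrep -P "$parent")              # PID of the stand-in crawler
kill "$parent"                           # terminate only the wrapper
sleep 1

if kill -0 "$child" 2>/dev/null; then
    survived=yes                         # child was orphaned, not stopped
else
    survived=no
fi
echo "child survived: $survived"

kill "$child" 2>/dev/null || true        # clean up the stand-in
```

If the stand-in child is still alive after the wrapper is killed, that would match what I see with process 1234: the old scrapy has to be stopped explicitly.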