I'm trying to download all the images from a website.

Here is the website:

https://wall.alphacoders.com/by_sub_category.php?id=173173&name=Naruto+Wallpapers

I tried:

wget -nd -r -P /home/Pictures/ -A jpeg,jpg,bmp,gif,png https://wall.alphacoders.com/by_sub_category.php?id=173173&name=Naruto+Wallpapers

But it doesn't download the images.

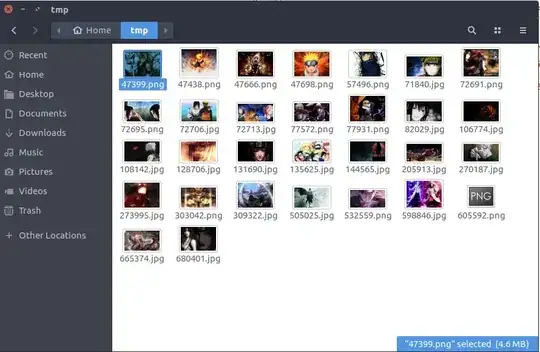

Result:

HTTP request sent, awaiting response... 200 OK

Length: unspecified [text/html]

/home/Pictures: Permission denied/home/Pictures/by_sub_category.php?id=173173: No such file or directory

Cannot write to ‘/home/Pictures/by_sub_category.php?id=173173’ (No such file or directory).