August 12, 2019

Today I heard about a leather power recliner rocker swivel chair that I missed at half price!

I've decided to write a YAD / Bash / wget application that has cron download the HTML file daily and compare the price:

- When the price changes, cron will email me.
- If it can't find the price (vendor changed website format), cron will email me an error message.
- If the vendor changed the item number, or it can't be found, cron will also email me.
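
A rough sketch of how those three checks might look in bash, assuming the page has already been saved to a local file (for example with `wget -q -O page.html <url>`). The function names `extract_price` and `check_price`, the item number, and the file names are all made up for illustration, and "the first dollar amount on the page is the price" is a naive assumption that a real vendor page will probably break.

```shell
#!/usr/bin/env bash
# Sketch only: function names, item numbers and file names are hypothetical.

# Print the first dollar amount (e.g. $1,299.99) found in an HTML file.
# Assumption: the first dollar amount on the page is the item's price.
extract_price() {
    grep -oE '\$[0-9][0-9,]*(\.[0-9]{2})?' "$1" | head -n 1
}

# Compare today's page with the last saved price and print one status line.
# Arguments: HTML file, item number, file holding the last known price.
# Returns: 0 = unchanged (or first run), 1 = price changed,
#          2 = item number not on page, 3 = no price on page.
check_price() {
    local html item last_file price last
    html=$1
    item=$2
    last_file=$3

    # Vendor changed the item number, or the item is gone.
    if ! grep -qF -- "$item" "$html"; then
        echo "ERROR: item $item not found"
        return 2
    fi

    # Vendor changed the page format, so no price could be extracted.
    price=$(extract_price "$html")
    if [ -z "$price" ]; then
        echo "ERROR: no price found for item $item"
        return 3
    fi

    # Read yesterday's price (empty on the first run), then save today's.
    last=$(cat "$last_file" 2>/dev/null || true)
    printf '%s\n' "$price" > "$last_file"

    if [ -n "$last" ] && [ "$price" != "$last" ]; then
        echo "CHANGED: item $item went from $last to $price"
        return 1
    fi
    echo "SAME: item $item is $price"
    return 0
}
```

cron already mails whatever a job prints to the crontab owner (provided a mail transfer agent is installed), so a wrapper script that prints the status line only when `check_price` returns non-zero may be all the "email me" part needs.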

I'll post an answer when done. Google will steal it later :)

September 24, 2017 post left for historical reference

I need to buy a specific laptop when it goes on sale.

This gaming laptop is the best I've found after a week of cost / benefit analysis. I decided to buy new after searching on Kijiji, where a seller with a used laptop that looked too good to be true, and who accepted PayPal, refused to answer simple questions.

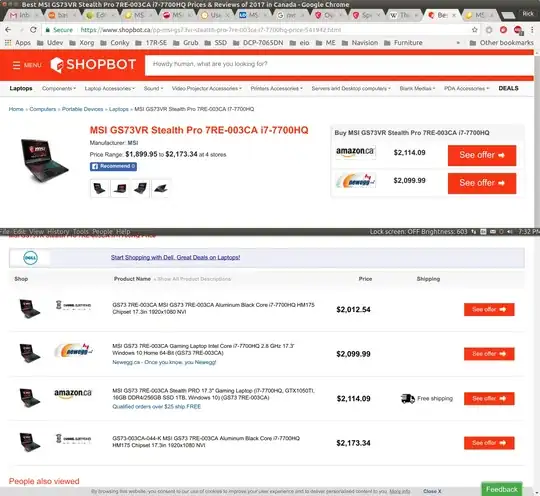

The traditional method of a "shop bot" doesn't give acceptable results:

It throws the Amazon price out there at $2114, then shows Newegg at $2099, which is the same price as all the local retailers. Then it lists four specific sites, none of which are acceptable.

I've found these retailers selling the same laptop model for $2099.99:

- Staples
- London Drugs
- CDW Canada (granted, $15 cheaper, but not local)
- Newegg.ca (not local, but well known)
- Canada Computers
- Grand and Toy (actually $30 more, but on sale now for $120 less until Sept 15, 2017)
- BestBuy.ca (actually $50 more, but known for fantastic spontaneous sales)
- TigerDirect.ca (actually $150 less, but not local and not well known)
- Memory Express (actually $200 more, but I like the company and they can have fantastic sales, like 75% off a Sony subwoofer)

There are probably more out there that I haven't found yet.

I don't want to pay $2100 and I think some day someone will list it for $1400 but then it will sell out in a week. It might be Boxing Day, it might be sooner or it might be later but it will happen and I'm the kind that can play the long game.

How can I get cron to look at these links every day, search for a $ sign and e-mail me when the price has changed from the day before?

I don't have time to check prices on 10+ websites every day. Plus, I don't actually have the acumen or fortitude to do that. Just look how it took 3 months to update this question!

If there aren't any off-the-shelf open-source solutions out there, I'll likely write it myself in bash. (Sorry to all the Python lovers out there.)

Original post from June 27, 2017 left for historical reference

I bought an IKEA ARKELSTORP coffee table last year and now want to buy a matching console table. Yesterday I sadly discovered the line has been discontinued, and the only items available in Edmonton are four desks, which I don't need. I went on Kijiji only to discover that someone had been selling a used console table on June 2nd, and I lost out.

Is there a way to have cron run a Google search every day and save the results returned on the first page?

I did a little homework and found this "poor man's cron", but it uses Google Apps Script and is overly complicated for my needs.

I envision doing a search manually one time and saving the first page of results. From then on, cron can run the search daily and email me if new results are found. cron would run diff on its results compared to my saved baseline results and email me when they differ.
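
The comparison step could be sketched roughly like this, assuming both the baseline and the fresh results are already saved as plain text files with one result per line. The function name `new_results` and the file names in the usage note are hypothetical, and how the search results get fetched and cleaned up is left out entirely.

```shell
#!/usr/bin/env bash
# Sketch only: assumes one search result per line in each file.

# Print the lines that appear in the fresh results but not in the baseline.
# Arguments: baseline file, fresh results file.
# Returns 1 when new lines were found, 0 otherwise.
new_results() {
    local baseline fresh added
    baseline=$1
    fresh=$2

    # In diff's default output, lines only in the second file start with "> ".
    added=$(diff "$baseline" "$fresh" | sed -n 's/^> //p')

    if [ -n "$added" ]; then
        printf '%s\n' "$added"
        return 1
    fi
    return 0
}
```

Called as `new_results console.base console.today` from a cron job, it prints nothing on a quiet day, so cron would only have output to mail when something new shows up.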

As I will probably search for more than one thing, I would set up a bash array (using yad) containing the search string, the file name of the baseline manual search results, and a counter for cron to update with the number of times searched.
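
Bash has no arrays of records, so one possible layout (an assumption, not the only way) is a single array where each element packs the three fields into one `|`-separated string. The array name `searches`, the helper `bump_count`, and the sample file names below are invented for illustration; the yad front end is not shown.

```shell
#!/usr/bin/env bash
# Sketch only: each element is "search string|baseline file|times searched".
# The sample entries and file names are hypothetical.
searches=(
    "IKEA ARKELSTORP console table|arkelstorp.base|0"
    "leather power recliner|recliner.base|0"
)

# Increment the "times searched" counter of the record at index $1.
bump_count() {
    local i=$1 query baseline count
    IFS='|' read -r query baseline count <<< "${searches[$i]}"
    searches[$i]="$query|$baseline|$((count + 1))"
}

# Pretend cron just ran the first search, then list every record.
bump_count 0
for rec in "${searches[@]}"; do
    IFS='|' read -r query baseline count <<< "$rec"
    printf '%-32s %-16s %s\n' "$query" "$baseline" "$count"
done
```

This only works while the search strings never contain the `|` character; a different separator would be needed otherwise.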

Any and all partial answers on given parts of this project happily up-voted.

For those proposing solutions in C, I'm sad to say I'm only just beginning to wrap my mind around it with the statx command, and I could only compile complete code, not "tweak it" to my needs. As such, shell / bash code is best for a Linux neophyte like me.