I just had an argument with a colleague and thought I'd reach out to the experts on this. Here's the scenario. We were using a website that measures your connection's speed. We first tested against a server that is far from us (we are in Malaysia and the server was in the US) and got around 2 Mbps. Then we tried a server in Singapore and it was much faster (around 15 Mbps). My colleague believed it's because of the physical distance while I don't think it matters. My understanding is once you have done the initial handshake and the data flow has started, it doesn't matter where the server is located and the result should be almost the same. Am I missing something here? How does it really work?

You can confirm this yourself trivially. Ping the server to acquire latency. Then 2Mbps*Latency==Window. You can confirm actual window size with Wireshark. But let's assume you don't have window scaling on, then it's 64kB/2Mbps = 256ms, so I predict your server to be 256ms away. – ytti Jul 11 '13 at 06:26

@ytti is indirectly describing the BDP (bandwidth-delay product), which roughly translates into long (delay), fat (bandwidth) networks being more difficult to keep full, and anything less eats away from your potential throughput. See http://en.wikipedia.org/wiki/Bandwidth-delay_product. – generalnetworkerror Jul 11 '13 at 08:26

@ytti, Windows Vista and later have window scaling on by default... we need to know what OS Navid is using for the test – Mike Pennington Jul 11 '13 at 09:25

According to this http://support.microsoft.com/kb/934430 scaling (factor 8) is default in Vista, but only for non-HTTP. I'm not a Windows user myself so I can't verify. – ytti Jul 11 '13 at 09:43

@ytti, I'm not sure that's relevant. I run Vista, and I'm looking at a sniff of my HTTP connection to that support page, and the TCP SYN says: "Window Scale: 2 (Multiply by a factor of 4)" – Mike Pennington Jul 11 '13 at 09:50

What does your 'netsh int tcp show heuristics' say? On a corporate Windows 2008 terminal server I see it 'disabled'. There is a hot-fix to turn it on (it runs 'netsh int tcp set heuristics enabled'). I'd guess you have it enabled? A friend just reported it disabled on his Windows 8 as well. – ytti Jul 11 '13 at 09:55

`netsh interface tcp show heuristics`: `Window Scaling heuristics: enabled` – Mike Pennington Jul 11 '13 at 10:10

So it turns out this option has no impact on Firefox; window scaling is on there regardless. But with MSIE on Windows 7, if this option is disabled there is no scaling; enable it and reboot, and MSIE uses scaling. – ytti Jul 11 '13 at 10:23

Did any answer help you? If so, you should accept the answer so that the question doesn't keep popping up forever, looking for an answer. Alternatively, you can post and accept your own answer. – Ron Maupin Jan 03 '21 at 01:37

4 Answers

My colleague believed it's because of the physical distance while I don't think it matters. My understanding is once you have done the initial handshake and the data flow has started, it doesn't matter where the server is located and the result should be almost the same. Am I missing something here? How does it really work?

Both of you were right at some point in history, but your understanding is mostly correct... today :). There are a few factors that changed between the older answer your friend gave, and the capabilities we have today.

- TCP Window Scaling

- Host buffer tuning

The difference in the results you saw could have been affected by:

- Packet loss

- Parallel TCP transfers

TCP Window Scaling: The bandwidth-delay effect

As your friend mentioned, older implementations of TCP suffered from the limits imposed by the original 16-bit receive window size in the TCP header (ref RFC 793: Section 3.1); RWIN controls how much unacknowledged data can be waiting in a single TCP socket. 16-bit RWIN values limited internet paths with high bandwidth-delay products (and many of today's high bandwidth internet connections would be limited by a 16-bit value).

For high RTT values, it's helpful to have a very large RWIN. For example, if your path RTT from Malaysia to the US is about 200ms, the original TCP RWIN would limit you to 2.6Mbps.

Throughput_max = Rcv_Win / RTT

Throughput_max = (65535 * 8) / 0.200

Throughput_max = 2.6 Mbps

RFC 1323 defined several very useful TCP additions which helped overcome these limitations; one of those RFC 1323 TCP options is "window scaling". It introduces a scale factor, which multiplies the original RWIN value to get the full receive window value; window scaling allows a maximum RWIN of 1073725440 bytes (65535 * 2^14). Applying the same calculations:

Throughput_max = Rcv_Win / RTT

Throughput_max = (1073725440 * 8) / 0.200

Throughput_max = 42.95 Gbps
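The two calculations above can be reproduced with a short Python sketch. The helper name `window_limited_throughput_bps` is hypothetical (it is not from any library), and the 200 ms RTT is the same assumed figure used in the example above, not a measurement.

```python
def window_limited_throughput_bps(rwin_bytes: int, rtt_seconds: float) -> float:
    """Upper bound for one TCP stream: at most one full window per round trip."""
    if rtt_seconds <= 0:
        raise ValueError("RTT must be positive")
    return rwin_bytes * 8 / rtt_seconds


RTT = 0.200  # assumed Malaysia <-> US round-trip time, in seconds

# Classic 16-bit receive window, no window scaling
unscaled = window_limited_throughput_bps(65535, RTT)

# Largest scaled window: 65535 shifted left by the maximum scale factor of 14
scaled = window_limited_throughput_bps(65535 << 14, RTT)

print(f"unscaled: {unscaled / 1e6:.2f} Mbps")
print(f"scaled:   {scaled / 1e9:.2f} Gbps")
```

This should print roughly 2.6 Mbps for the unscaled window and just under 43 Gbps for the largest scaled window. Plugging in your own ping RTT gives the ceiling for a single stream on your path.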

Keep in mind that TCP opens its window gradually over the duration of a transfer, as long as packet loss isn't a problem: the sender's congestion window (CWND) ramps up from a small initial value, and modern stacks also auto-tune RWIN as the transfer proceeds. To see really large transfer rates over a high-delay connection, you have to transfer a large file (so TCP has time to increase the window), and the path must be largely free of packet loss.

Packet Loss

Several years ago, internet circuits across the Pacific Ocean got pretty congested at times. I used to run video chats overseas during some of these high congestion / packet loss conditions... Even modest packet loss (such as 0.5% loss) can slow a high-bandwidth connection down.

It's also worth saying that not all TCP packet drops are bad... TCP treats a dropped packet as an endpoint-to-endpoint feedback signal, and some queueing schemes drop packets intentionally for exactly that reason. I mentioned above that TCP gradually opens the window over time (as long as packet loss is not a problem). When a congested circuit drops a packet, TCP responds by slowing the connection down (and spread across enough TCP sockets, TCP's response helps mitigate circuit congestion). However, this kind of bulk tail drop has some drawbacks... TCP sockets in this condition can significantly throttle back throughput (via CWND, the congestion window) when faced with tail drop from circuit congestion.

Also related to the subject of intentional packet drops is Random Early Detection (RED). This is a great feature to enable on your TCP traffic queues when you enable QoS / HQoS. It works by watching the interface queue depth and randomly dropping packets before the queue is completely full, with a drop probability that rises as the queue fills. The packet drops (on TCP connections) slow sockets down, and RED is widely considered a much better alternative than tail-dropping TCP. It's also worth noting that this feature only helps with TCP... as you may be aware, many (non-multimedia) UDP protocols do not slow down in the face of packet loss.
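The early-drop idea behind RED can be sketched in a few lines of Python. This is a simplified version of the classic RED curve: it omits the moving average of the queue depth and the per-packet count correction that real implementations use. The thresholds and `max_p` are illustrative values, not vendor defaults, and both function names are hypothetical.

```python
import random


def red_drop_probability(avg_queue: float, min_th: float,
                         max_th: float, max_p: float) -> float:
    """Simplified RED curve: no drops below min_th, a linear ramp up to
    max_p between the thresholds, and certain drop at or above max_th."""
    if avg_queue < min_th:
        return 0.0
    if avg_queue >= max_th:
        return 1.0
    return max_p * (avg_queue - min_th) / (max_th - min_th)


def should_drop(avg_queue: float, min_th: float = 20, max_th: float = 60,
                max_p: float = 0.1, rng=random.random) -> bool:
    """Decide whether to drop an arriving packet (thresholds are assumed)."""
    return rng() < red_drop_probability(avg_queue, min_th, max_th, max_p)


for depth in (10, 30, 50, 70):
    p = red_drop_probability(depth, 20, 60, 0.1)
    print(f"queue depth {depth}: drop probability {p:.3f}")
```

Because only a few randomly chosen flows see a drop while the queue is still filling, they back off at different times instead of all collapsing together when the queue overflows.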

Parallel TCP streams

FYI, some speed test websites use parallel TCP streams to increase throughput; this may be affecting the results you see, because parallel TCP streams dramatically increase throughput when there is some packet loss in the path. I have seen four parallel TCP streams completely saturate a 5Mbps cable modem that suffered from 1% constant packet loss. Normally 1% loss would noticeably lower the throughput of a single TCP stream.
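To get a rough feel for why parallel streams help under loss, here is a sketch based on the well-known Mathis et al. approximation for loss-limited TCP throughput, rate ≈ (MSS / RTT) * C / sqrt(p) with C ≈ sqrt(3/2). The answer itself does not use this model; it is swapped in here for illustration only. It describes steady-state Reno-style congestion control, so treat the numbers as order-of-magnitude estimates. The MSS, RTT and function names are assumptions.

```python
import math


def mathis_throughput_bps(mss_bytes: int, rtt_seconds: float,
                          loss_rate: float) -> float:
    """Approximate loss-limited rate of a single TCP stream (Mathis model)."""
    if not 0 < loss_rate <= 1:
        raise ValueError("loss_rate must be in (0, 1]")
    c = math.sqrt(3 / 2)
    return (mss_bytes * 8 / rtt_seconds) * c / math.sqrt(loss_rate)


def parallel_throughput_bps(streams: int, link_bps: float, mss_bytes: int,
                            rtt_seconds: float, loss_rate: float) -> float:
    """Independent streams add up until the link itself is the bottleneck."""
    per_stream = mathis_throughput_bps(mss_bytes, rtt_seconds, loss_rate)
    return min(link_bps, streams * per_stream)


# Assumed values: 1460-byte MSS, 100 ms RTT, 1% loss, 5 Mbps link
for n in (1, 2, 4):
    rate = parallel_throughput_bps(n, 5e6, 1460, 0.100, 0.01)
    print(f"{n} stream(s): {rate / 1e6:.2f} Mbps")
```

With these assumed inputs a single stream tops out well below the 5 Mbps link rate, while four streams together reach it.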

Bonus Material: Host Buffer tuning

Many older OS implementations had sockets with limited buffers; with an older OS (like Windows 2000), it didn't matter whether TCP allowed large amounts of data to be in-flight... its socket buffers were not tuned to take advantage of the large RWIN. There was a lot of research done to enable high performance on TCP transfers. Modern operating systems (for this answer, we can call Windows Vista and later "modern") include better buffer allocation mechanisms in their socket buffer implementations.
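If you are curious what your own host starts with, the standard `SO_RCVBUF` and `SO_SNDBUF` socket options can be read from Python. This is only a quick inspection sketch: the values are platform-specific starting sizes for a fresh socket, and a stack with buffer auto-tuning may grow them during a transfer. The helper name is hypothetical.

```python
import socket


def default_tcp_buffer_sizes() -> tuple[int, int]:
    """Return the (receive, send) buffer sizes the OS gives a new TCP socket."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        rcv = sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
        snd = sock.getsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF)
    return rcv, snd


rcv, snd = default_tcp_buffer_sizes()
print(f"SO_RCVBUF: {rcv} bytes, SO_SNDBUF: {snd} bytes")
```

Comparing the receive buffer with bandwidth * RTT for your path shows whether the initial buffer alone could keep the pipe full.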

As a side note: there are plenty of old-style routers that will barf on window scaling (there are fewer every day, but there are still plenty) and reset it to zero, decreasing your bandwidth drastically. The probability of hitting one of these broken routers increases with the number of hops to the destination, although most network infrastructure providers should not have this problem nowadays. – Chris Down Jul 11 '13 at 10:59

Routers are L3 devices. TCP window scaling is a L4 process. The router either forwards the packet or it doesn't and, barring the use of QoS mechanisms, there's no differentiation between TCP, UDP or any other protocol. Routers certainly have an effect on initial MSS negotiation (..or do if they drop ICMP unreachables) but the sliding window algorithm is purely a function of the stacks on end systems. – rnxrx Jul 12 '13 at 03:08

@rnxrx I'd agree, it would mostly be edge firewalls that get angry about TCP options. I've not heard of a router mangling the TCP window scaling option, but I wouldn't be terribly surprised if someone came up with a vendor/model that has done it. Considering that it's not rare for edge routers to mangle the TCP MSS option in transit, it's not that big a leap to imagine someone fscking that up. – ytti Jul 12 '13 at 06:06

Short answer: Yes, distance has an effect on single stream bandwidth.

The internet has evolved means of limiting that effect... delayed ACK, window scaling, other protocols :-) But physics still wins in the end. In this case, it's much more likely to be general network congestion over so many hops -- a single dropped packet is enough to cut a TCP stream's throughput sharply.

While there already are excellent answers to this, I'd like to add that both "no, speed is not necessarily affected by distance" and "yes, very often speed is affected by distance" are true.

Why is that?

Strongly simplified: the longer the distance, the more "hops" are involved on the way through the Internet. The maximum bandwidth is determined by the slowest hop and by competing traffic. With increasing distance and a somewhat random distribution of hop speeds, the probability of getting a slower overall speed increases. Additionally, physics gets in the way: increasing latency may also slow down the connection.

But this is not to be taken for granted. Technology allows us to build a planet-encompassing connection of nearly any desired bandwidth. However, bandwidth and distance are enemies and both dramatically increase the cost of the connection, again making it less likely to exist just for the connection you might need right now.

Of course, this is oversimplified but in reality, this situation is what you very often find. And then again you don't when there's a surprisingly fast connection or a distribution proxy just around the corner - but when everything's instant we rarely think about the speed of the Internet...

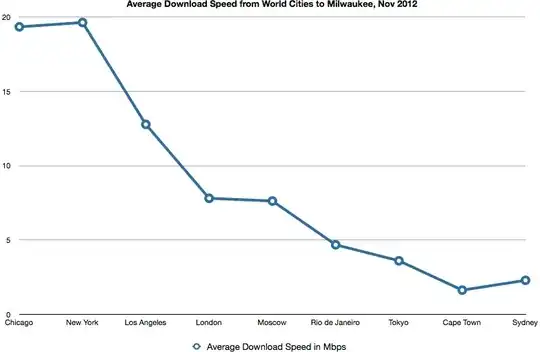

Yes, here's real-world data, not just theory.

Source: Average Download Speed From World Cities, Andrew Martin

Link only answers are discouraged. Please provide details which make this answer useful without depending on the linked webpage. – Teun Vink Aug 11 '17 at 05:05

I'm not your dude. Also, this image has no meaning without an explanation of what's on the Y-axis and how it was measured. And even then, you should explain how this image is an answer to the question. – Teun Vink Aug 12 '17 at 21:43

I don't know why it's difficult for you, but the X and Y axes are labeled. "Average Download Speed in Mbps" – Jonathan Aug 16 '18 at 23:29