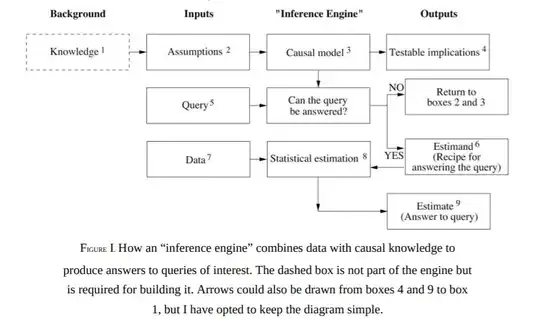

I am reading The Book of Why: The New Science of Cause and Effect by Judea Pearl, and on page 12 I found the diagram above.

The box to the right of box 5, "Can the query be answered?", comes before box 6 and box 9, which are the steps that actually answer the question. I took this to mean that deciding whether a question can be answered is easier than actually answering it.

Questions

- Do we need less information to tell whether we can answer a problem (epistemic uncertainty) than to actually answer it?

- Do we need to try to answer the problem first and only then realize that we cannot answer it?

- Do we answer the problem and provide an uncertainty estimate at the same time?