I have a desktop with Ubuntu installed on an SSD. Can I add 4 more drives and put them in RAID 5 (turn it into a NAS) without reinstalling? If so, how? Thanks.

2 Answers

You can create a RAID 5 array from the additional disks with the `mdadm` package, without reinstalling. See the guide "How To Create RAID Arrays with mdadm".
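As a minimal sketch of what that looks like for the setup in the question, assume the four new disks appear as `/dev/sdb` through `/dev/sde` (hypothetical names; check yours with `lsblk` first), hold nothing you want to keep, and that the array should be mounted at a hypothetical `/mnt/nas`. The helper names `run`, `create_raid5` and `raid5_usable` and the `DRY_RUN` switch are also illustrative: every command is only printed unless `DRY_RUN=0` is set, because these steps wipe the listed disks.

```shell
#!/usr/bin/env bash
# Sketch only: build a RAID 5 array from extra disks on an existing Ubuntu
# install with mdadm. Device names and the mount point are HYPOTHETICAL.
set -euo pipefail

# Echo commands by default; run them for real only when DRY_RUN=0,
# because mdadm --create and mkfs destroy data on the member disks.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    echo "+ $*"
  fi
}

# Usable RAID 5 capacity: (number of disks - 1) x size of the smallest disk,
# in whatever unit the size is given in. One disk's worth goes to parity.
raid5_usable() {
  local disks=$1 smallest=$2
  echo $(( (disks - 1) * smallest ))
}

# Create, format, record and mount a RAID 5 array from the given disks.
create_raid5() {
  local md=/dev/md0 mnt=/mnt/nas   # hypothetical array name and mount point
  if [ "$#" -lt 3 ]; then
    echo "RAID 5 needs at least 3 disks" >&2
    return 1
  fi
  run sudo apt install -y mdadm
  run sudo mdadm --create "$md" --level=5 --raid-devices="$#" "$@"
  run sudo mkfs.ext4 "$md"
  # Record the array (appends blindly; run once) and rebuild the initramfs
  # so it is assembled under the same name at boot.
  run sudo sh -c "mdadm --detail --scan >> /etc/mdadm/mdadm.conf"
  run sudo update-initramfs -u
  run sudo mkdir -p "$mnt"
  run sudo mount "$md" "$mnt"
}

# Example (prints the commands only):
# create_raid5 /dev/sdb /dev/sdc /dev/sdd /dev/sde
```

With four equal disks, three disks' worth of space is usable. Creating the array starts an initial parity sync in the background; the filesystem can be used in the meantime, and `cat /proc/mdstat` shows the sync progress.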


Your answer led me to Webmin, which made creating the RAID really easy. – Lysandus Oct 21 '17 at 13:04

I wanted to create a RAID 0 array for a high-performance video transcoding workstation that I built with a pair of M.2 PCIe SSDs. Everything I had found recommended installing Ubuntu Server to accomplish this, but I didn't think that was necessary for my use case.

I had originally set up the system to dual-boot Windows 10 and Ubuntu 16.04, with one drive for Windows 10 and the other for Ubuntu. UEFI was giving me fits, so I enabled CSM in the BIOS and installed both OSes in Legacy mode. I then shrank both installations (and moved the swap partition) to leave roughly half of each drive (55.9G in my case) unallocated for the planned RAID 0 volume.

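I used `fdisk` to create a RAID partition in the remaining space on each drive: type `fd` (Linux raid autodetect) on the MBR-partitioned Windows drive, and the equivalent Linux RAID type on the GPT-partitioned Ubuntu drive.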

Here's the end result on the Windows drive:

```
Device         Boot     Start       End   Sectors  Size Id Type
/dev/nvme0n1p1 *         2048   1126399   1124352  549M  7 HPFS/NTFS/exFAT
/dev/nvme0n1p2        1126400 117221375 116094976 55.4G  7 HPFS/NTFS/exFAT
/dev/nvme0n1p3      117221376 234441647 117220272 55.9G fd Linux raid autodetect
```

and the Ubuntu drive:

```
Device             Start       End   Sectors  Size Type
/dev/nvme1n1p1      2048      4095      2048    1M BIOS boot
/dev/nvme1n1p2      4096 100247551 100243456 47.8G Linux filesystem
/dev/nvme1n1p3 100247552 117221375  16973824  8.1G Linux swap
/dev/nvme1n1p4 117221376 234441614 117220239 55.9G Linux RAID
```

I installed mdadm with `sudo apt install mdadm` and then configured the array as follows.

I checked that both drives were detected and partitioned properly with `lsblk`:

```
nvme0n1     259:0    0 111.8G  0 disk
├─nvme0n1p1 259:3    0   549M  0 part
├─nvme0n1p2 259:10   0  55.4G  0 part
└─nvme0n1p3 259:11   0  55.9G  0 part
nvme1n1     259:1    0 111.8G  0 disk
├─nvme1n1p1 259:4    0     1M  0 part
├─nvme1n1p2 259:5    0  47.8G  0 part /
├─nvme1n1p3 259:6    0   8.1G  0 part
└─nvme1n1p4 259:2    0  55.9G  0 part
```

I created the array with `sudo mdadm -C /dev/md0 -l raid0 -n 2 /dev/nvme0n1p3 /dev/nvme1n1p4` (`-C` creates the array, `-l` sets the RAID level and `-n` the number of member devices).

I checked the status with:

```
$ cat /proc/mdstat
Personalities : [raid0]
md0 : active raid0 nvme1n1p4[1] nvme0n1p3[0]
      117153792 blocks super 1.2 512k chunks

unused devices: <none>
```

`sudo mdadm --detail /dev/md0` provides more detailed information:

```
/dev/md0:
           Version : 1.2
     Creation Time : Tue Sep 11 10:31:25 2018
        Raid Level : raid0
        Array Size : 117153792 (111.73 GiB 119.97 GB)
      Raid Devices : 2
     Total Devices : 2
       Persistence : Superblock is persistent
       Update Time : Tue Sep 11 10:31:25 2018
             State : clean
    Active Devices : 2
   Working Devices : 2
    Failed Devices : 0
     Spare Devices : 0
        Chunk Size : 512K
              Name : me-Z370-HD3P:0 (local to host me-Z370-HD3P)
              UUID : bd71c1dd:2eb9fbd6:66204362:dcf71a05
            Events : 0

    Number   Major   Minor   RaidDevice State
       0      259      11        0      active sync   /dev/nvme0n1p3
       1      259       2        1      active sync   /dev/nvme1n1p4
```
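Arrays created after mdadm is installed are usually not added to `/etc/mdadm/mdadm.conf` automatically, so the array may come up under another name such as `/dev/md127` after a reboot. A minimal sketch of recording it once, keyed on the UUID that `mdadm --detail` reports; the function names `md_uuid` and `persist_array` are hypothetical, and the config path assumes the Debian/Ubuntu default.

```shell
#!/usr/bin/env bash
# Sketch: make sure an md array is listed in mdadm.conf so it is assembled
# under the same name at boot. CONF defaults to the Debian/Ubuntu path.
set -euo pipefail

CONF=${CONF:-/etc/mdadm/mdadm.conf}

# Read `mdadm --detail` output on stdin and print the array UUID.
md_uuid() {
  awk -F' : ' '/^ *UUID :/ { print $2 }'
}

# Append the array's ARRAY line to $CONF only if its UUID is not there yet,
# then rebuild the initramfs so early boot uses the same configuration.
persist_array() {
  local md=$1 uuid
  uuid=$(sudo mdadm --detail "$md" | md_uuid)
  if grep -q "UUID=$uuid" "$CONF"; then
    echo "$md ($uuid) is already listed in $CONF"
  else
    sudo mdadm --detail --brief "$md" | sudo tee -a "$CONF" >/dev/null
    sudo update-initramfs -u
  fi
}

# Usage (needs root and an assembled array):
# persist_array /dev/md0
```

Checking for the UUID first makes the function safe to re-run, unlike a plain `mdadm --detail --scan >> mdadm.conf`, which adds a duplicate line each time.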

I made the filesystem with `sudo mkfs.ext4 /dev/md0` and mounted it with:

```
$ sudo -i
# mkdir /mnt/raid0
# mount /dev/md0 /mnt/raid0/
```

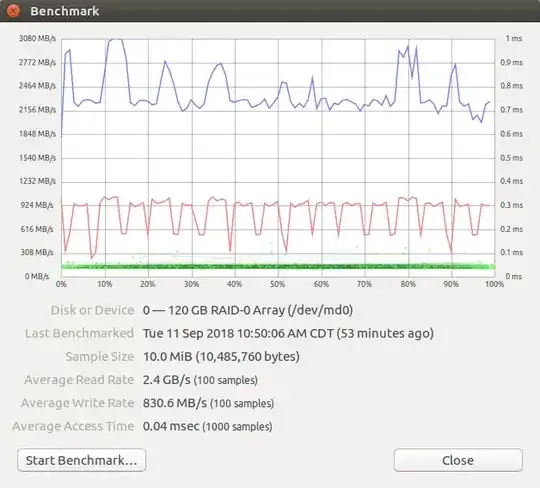

All that remains is to benchmark it and create an fstab entry to automount it at startup.
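One way to do both, as a sketch rather than the author's exact commands: the fstab line is keyed on the filesystem UUID reported by `blkid`, with `nofail` so a missing array does not stop the boot, and a rough sequential benchmark uses `dd` with direct I/O. The function names and the 4 GiB test size are illustrative assumptions; `/dev/md0` and `/mnt/raid0` are the device and mount point used above.

```shell
#!/usr/bin/env bash
# Sketch: automount the RAID 0 filesystem at boot and take a rough
# sequential-throughput reading. The 4 GiB test size is an arbitrary choice.
set -euo pipefail

# Build an fstab line keyed on the filesystem UUID, which stays the same
# even if the array is assembled as /dev/md127 instead of /dev/md0.
# "nofail" lets the system finish booting if the array is missing.
fstab_line() {
  local uuid=$1 mnt=$2
  printf 'UUID=%s %s ext4 defaults,nofail 0 2\n' "$uuid" "$mnt"
}

add_fstab_entry() {
  local dev=$1 mnt=$2 uuid
  uuid=$(sudo blkid -s UUID -o value "$dev")
  fstab_line "$uuid" "$mnt" | sudo tee -a /etc/fstab >/dev/null
  sudo mount -a   # checks the new entry now rather than at the next boot
}

# Rough sequential read and write test; direct I/O bypasses the page cache.
benchmark() {
  local dev=$1 mnt=$2
  sudo dd if="$dev" of=/dev/null bs=1M count=4096 iflag=direct
  sudo dd if=/dev/zero of="$mnt/ddtest" bs=1M count=4096 oflag=direct
  sudo rm -f "$mnt/ddtest"
}

# Usage (needs root):
# add_fstab_entry /dev/md0 /mnt/raid0
# benchmark /dev/md0 /mnt/raid0
```

The read test only reads from the array, so it is safe on a filesystem holding data; the write test creates and then deletes a single 4 GiB file on the mounted filesystem.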


`dd` the data off your drive, format it for the RAID array and `dd` the data back. If you plan on using software RAID you'll have to make changes anyway; if using hardware RAID this will be outside of software, but your hardware will want to re-format anyway. RAID 5 will mean different data is stored on your SSD anyway (redundancy and only part of your data), so you're creating more work for yourself than a clean install. I'd recommend a clean install, then restore the settings and data, and if you're using it as a NAS, forget the desktop for speed. – guiverc Oct 17 '17 at 01:42